From Zero to Hero: Building a Proxy List Generator

In the fast-paced world of data extraction and web scraping, having the right tools at your disposal can make all the difference. An essential resource for web scrapers is a robust proxy list generator. Proxies act as intermediaries between your scraping tool and the target website, allowing you to work around restrictions, maintain anonymity, and improve the efficiency of your data collection. This article walks you through building a proxy list generator, highlighting its essential components: proxy scrapers, checkers, and verification tools.

As the need for reliable proxies continues to rise, knowing how to source and verify both free and paid proxies is a valuable skill. Whether you want to scrape data for SEO purposes, automate tasks, or gather insights for research, locating high-quality proxies is crucial. We will explore several types of proxies, from HTTP to SOCKS, and discuss the differences and best use cases for each. By the end of this article, you will understand how to construct your own proxy list generator and make good use of the available tools for effective web scraping.

Understanding Proxies and Their Categories

Proxy servers act as intermediaries between a user and the web, relaying requests and responses while hiding the user's real IP address. They play a critical role in data scraping, task automation, and online privacy. By routing internet traffic through a proxy server, clients can access content that may be restricted in their geographical region and keep their own address out of the target site's logs.

There are several types of proxy servers, each catering to different purposes. HTTP proxies are designed specifically for web traffic, while SOCKS proxies work at a lower level and can carry other kinds of traffic as well, such as FTP or email. SOCKS4 and SOCKS5 are the two popular versions, with SOCKS5 adding features such as UDP support and authentication options. Understanding these differences is crucial for selecting the appropriate proxy for a particular purpose.
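To make the protocol differences concrete, here is a minimal sketch of how a generator might represent each proxy as a URL. The function names `proxy_url` and `proxies_mapping` are illustrative, not part of any library, and the addresses are documentation-range placeholders. The scheme-to-URL mapping follows the convention used by common HTTP clients; note that some clients need an extra SOCKS add-on before they accept `socks4://` or `socks5://` URLs.

```python
from urllib.parse import quote

SUPPORTED_SCHEMES = {"http", "socks4", "socks5"}


def proxy_url(scheme, host, port, username=None, password=None):
    """Build a proxy URL such as 'socks5://user:pass@203.0.113.7:1080'."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    if password is not None and scheme == "socks4":
        # SOCKS4 has no password authentication; SOCKS5 does.
        raise ValueError("SOCKS4 does not support password authentication")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' stay unambiguous.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


def proxies_mapping(url):
    """Return the per-protocol mapping shape that many HTTP clients accept."""
    return {"http": url, "https": url}


print(proxy_url("http", "203.0.113.7", 8080))
print(proxy_url("socks5", "203.0.113.7", 1080, "user", "p@ss"))
```

Keeping the scheme inside the URL means the rest of the generator can treat every proxy as a single string, whatever its type.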

When it comes to web scraping and data extraction, the distinction between private and public proxies is critical. Private (dedicated) proxies are allocated to a single client, offering better security and performance, whereas public proxies are shared by many clients, which can result in slower speeds and a greater risk of getting banned. High-quality proxies can significantly improve the efficiency of data extraction tools and help ensure reliable data collection from multiple sources.

Constructing a Proxy Scraping Tool

Developing a proxy scraper involves several stages to successfully collect proxies from multiple sources. Start by locating dependable sites that offer free proxies, making sure to cover a variety of types such as HTTP, SOCKS4, and SOCKS5. It's essential to pick sites that refresh their lists frequently so that the proxies are current. Popular sources include forums, API services, and dedicated proxy listing sites.

Once you have a list of potential sources, you can use a programming language like Python to automate the scraping process. Libraries such as BeautifulSoup and Scrapy are well suited to parsing HTML and extracting data. Write a script that fetches the pages of these proxy list sources and extracts the proxy details, such as IP address and port. Make sure your scraper complies with each site's terms of service, adding delays between requests and avoiding behavior that triggers anti-bot measures.
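The extraction step can be sketched with nothing but the standard library. This is a minimal illustration, assuming the source page lists proxies either as `ip:port` text or as adjacent table cells; real listing sites vary, and a parser such as BeautifulSoup is usually the more robust choice. The names `fetch_page` and `extract_proxies`, the User-Agent string, and the two-second delay are all assumptions made for this example.

```python
import re
import time
import urllib.request

TAG_RE = re.compile(r"<[^>]+>")
# An IPv4 address, then ':' or whitespace, then a 2-5 digit port.
PROXY_RE = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3})[:\s]+(\d{2,5})(?![\d.])")


def fetch_page(url, delay_seconds=2.0, timeout=10):
    """Download a page, pausing first so the source is not hammered."""
    time.sleep(delay_seconds)
    request = urllib.request.Request(
        url, headers={"User-Agent": "proxy-list-generator-example"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_proxies(page_text):
    """Return unique 'ip:port' strings found in HTML or plain text."""
    text = TAG_RE.sub(" ", page_text)  # turn markup into whitespace
    seen, result = set(), []
    for ip, port in PROXY_RE.findall(text):
        octets_ok = all(int(part) <= 255 for part in ip.split("."))
        port_ok = 0 < int(port) <= 65535
        entry = f"{ip}:{port}"
        if octets_ok and port_ok and entry not in seen:
            seen.add(entry)
            result.append(entry)
    return result


sample = "<tr><td>203.0.113.10</td><td>8080</td></tr>\n198.51.100.4:3128"
print(extract_proxies(sample))
```

In practice you would call `extract_proxies(fetch_page(url))` for each source you are permitted to scrape and merge the results.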

After gathering the proxy information, the next step is to refine the collection by validating each proxy. This is where a proxy checker comes into play. Add functionality to your scraper that tests each proxy's connectivity, latency, and anonymity. By sending requests through each proxy and measuring the result, you can eliminate poor performers and end up with a strong list of reliable proxies for your data collection projects.
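A basic connectivity and latency check might look like the sketch below. It uses only the standard library, which handles HTTP proxies but not SOCKS, so treat it as a starting point. The test URL, the timeout, and the function names `fetch_via_proxy` and `check_proxy` are assumptions for illustration; the `fetch` parameter exists so the timing logic can be exercised without touching the network.

```python
import time
import urllib.request

TEST_URL = "http://example.com/"  # assumption: any stable page you may request


def fetch_via_proxy(proxy, url=TEST_URL, timeout=5.0):
    """Request `url` through an HTTP proxy given as 'ip:port'; return the status."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.status


def check_proxy(proxy, fetch=fetch_via_proxy):
    """Return a small report: whether the proxy answered and how long it took."""
    start = time.perf_counter()
    try:
        status = fetch(proxy)
    except Exception:
        # Timeouts, refused connections, and malformed replies all mean "dead".
        return {"proxy": proxy, "alive": False, "latency": None}
    latency = time.perf_counter() - start
    return {"proxy": proxy, "alive": status == 200, "latency": latency}


# Offline demonstration with a stand-in fetch function.
print(check_proxy("203.0.113.10:8080", fetch=lambda proxy: 200))
```

Calling `check_proxy("203.0.113.10:8080")` without the `fetch` argument performs a real request through that proxy.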

Validating and Testing Proxies

Once you have collected a set of proxies, the next crucial step is to validate their performance. A good proxy checker will help you determine whether a proxy is operational, responsive, and suitable for your planned use. Proxy testing tools can test many proxies at once, giving you quick feedback on their speed and reliability. With a fast proxy checker, you can swiftly filter out dead proxies, saving time and improving your scraping efficiency.
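Testing many proxies at once is mostly a matter of running the checks concurrently, since each check spends its time waiting on the network. The following sketch uses a thread pool from the standard library. The name `filter_working` and the worker count are illustrative, and `check` stands for any function you supply that takes a proxy string and returns `True` or `False`.

```python
from concurrent.futures import ThreadPoolExecutor


def filter_working(proxies, check, max_workers=20):
    """Run `check` on every proxy in parallel and keep the ones that pass."""
    proxies = list(proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        results = list(pool.map(check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]


# Offline demonstration: a fake check that only accepts port 8080.
candidates = ["203.0.113.10:8080", "198.51.100.4:3128", "192.0.2.55:8080"]
print(filter_working(candidates, check=lambda proxy: proxy.endswith(":8080")))
```

Threads are a reasonable fit here because the work is I/O-bound; with a few dozen workers, a list of several hundred proxies finishes in roughly the time of a handful of timeouts rather than hundreds.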

Assessing proxy speed is vital for any web scraping activity. It ensures that the proxies you choose can handle the load of your requests without slowing you down. When assessing proxy performance, consider not just latency but also available bandwidth. Good free proxy checker tools let you measure both, helping you identify the proxies that perform best for your needs, whether you are collecting data or doing SEO research.
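One way to turn raw measurements into a decision is to summarize several samples per proxy and rank on the summary. The sketch below assumes you have already recorded latency samples (in seconds) for each proxy; the function names and the two-second cutoff are illustrative choices, and the median is used because a single slow response would distort an average.

```python
from statistics import median


def throughput_kbps(bytes_received, seconds):
    """Convert a timed download into kilobits per second."""
    return (bytes_received * 8 / 1000) / seconds


def rank_by_latency(latency_samples, max_median=2.0):
    """Return (proxy, median latency) pairs, fastest first, under the cutoff."""
    medians = {
        proxy: median(samples)
        for proxy, samples in latency_samples.items()
        if samples  # skip proxies with no successful measurements
    }
    fast_enough = [(p, m) for p, m in medians.items() if m <= max_median]
    return sorted(fast_enough, key=lambda item: item[1])


samples = {
    "203.0.113.10:8080": [0.2, 0.4, 0.3],
    "198.51.100.4:3128": [3.0, 2.5, 4.0],
    "192.0.2.55:8080": [0.1, 0.1, 0.9],
}
print(rank_by_latency(samples))
print(throughput_kbps(125000, 1.0))
```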

One more crucial aspect to consider is the anonymity level of the proxies in your set. Tools designed to evaluate proxy anonymity can tell you whether a proxy is transparent, anonymous, or elite (high anonymity). This classification matters depending on your project; for example, if you need to get around geographical restrictions or avoid detection by websites, high-anonymity proxies will serve you best. Knowing how to test whether a proxy works under different conditions further helps you maintain a reliable and efficient scraping approach.
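Anonymity checks usually work by sending a request through the proxy to an endpoint that echoes back the headers it received, then inspecting those headers. The sketch below shows only the classification step and assumes you already have the echoed headers as a dictionary. The header list is a common convention rather than a standard, and the function name and example addresses are illustrative.

```python
import re

# Headers that commonly reveal a request passed through a proxy.
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}


def classify_anonymity(echoed_headers, real_ip):
    """Classify a proxy as 'transparent', 'anonymous', or 'elite'."""
    normalized = {name.lower(): str(value) for name, value in echoed_headers.items()}
    for value in normalized.values():
        # Split on separators so '1.2.3.4' does not match inside '11.2.3.45'.
        if real_ip in re.split(r'[\s,;="]+', value):
            return "transparent"  # your own address leaked through
    if PROXY_HEADERS & normalized.keys():
        return "anonymous"  # proxy admits to being a proxy, but hides you
    return "elite"  # no sign of a proxy at all


print(classify_anonymity({"Via": "1.1 proxy", "Host": "judge.example"}, "198.51.100.7"))
```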

Best Options for Proxy Harvesting

When it comes to proxy scraping, choosing the right tools can greatly improve your efficiency and effectiveness. One of the leading options is ProxyStorm, known for its reliability and speed. This tool features a user-friendly interface and can scrape both HTTP and SOCKS proxies. With its advanced features, users can automate the workflow of gathering free proxies, so that a fresh list is always ready for web scraping.

Another good choice is a free proxy scraper, which lets users acquire proxies without spending a dime. Tools like these often come with built-in verification to assess the viability of the proxies collected. They can save time and deliver a steady flow of usable IP addresses, making them a good option for those just starting out or working with limited funds. Additionally, features such as sorting proxies by region or anonymity level can further improve the user experience.

For serious web scrapers, combining different tools can lead to better results. Fast proxy scrapers paired with high-quality proxy checkers help users harvest and verify proxies quickly. With these resources, web scrapers can maintain a robust pool of proxies to support their automation and data extraction efforts, and keep access to the proxy sources that best fit their needs.

Best Sources for Free Proxies

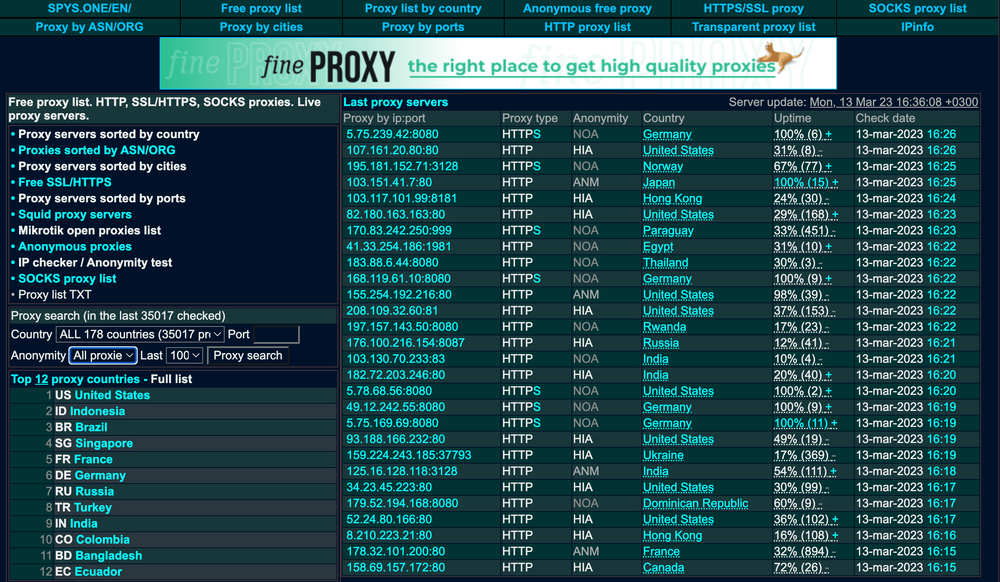

When searching for free proxies, a good place to start is online proxy lists and directories. Websites such as Free Proxy List, Spys.one, and ProxyScrape maintain extensive, frequently updated databases of free proxies. These websites organize proxies by parameters like speed, anonymity level, and type, such as HTTP or SOCKS. With these resources, users can quickly find proxies that meet their needs for web scraping or browsing at no cost.

Another useful source for free proxies is community-driven platforms where users share their own proxy finds. Forums like Reddit or specialized web scraping communities often feature threads dedicated to free proxy sharing. Engaging with these communities not only surfaces new proxy sources but also gives users quick feedback on proxy quality and performance. This interactive approach can help weed out ineffective proxies and highlight better options.

Finally, web scraping tools designed specifically to gather proxies can be a game-changer. Tools like ProxyStorm and dedicated Python scripts can simplify the process of scraping free proxies from different sources. By running these scripts, users can create fresh proxy lists tailored to their requirements. Additionally, such tools frequently include features for checking proxy performance and anonymity, making them valuable for anyone looking to collect and check proxies for web scraping tasks.

Utilizing Proxy Servers for Web Scraping and Automation

Proxy servers play a crucial role in web scraping and automation by letting a single scraper send its requests from many different IP addresses. This is essential for staying under rate limits and avoiding the IP bans that can occur when scraping intensively. By rotating through a pool of proxies, scrapers can maintain a steady flow of requests without raising red flags. This allows for more effective data collection from different sources, which is crucial for businesses that depend on up-to-date information from the web.
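Rotation itself needs very little machinery. The sketch below is one possible round-robin rotator that also retires proxies after repeated failures; the class name `ProxyRotator`, its method names, and the failure threshold are assumptions made for this example, not an established API.

```python
from collections import deque


class ProxyRotator:
    """Hand out proxies round-robin and drop ones that keep failing."""

    def __init__(self, proxies, max_failures=3):
        unique = list(dict.fromkeys(proxies))  # dedupe, keep order
        self._pool = deque(unique)
        self._failures = {proxy: 0 for proxy in unique}
        self._max_failures = max_failures

    def next_proxy(self):
        if not self._pool:
            raise RuntimeError("no working proxies left")
        proxy = self._pool[0]
        self._pool.rotate(-1)  # move the used proxy to the back
        return proxy

    def report_success(self, proxy):
        if proxy in self._failures:
            self._failures[proxy] = 0

    def report_failure(self, proxy):
        if proxy not in self._failures:
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._pool.remove(proxy)
            del self._failures[proxy]


rotator = ProxyRotator(["203.0.113.10:8080", "198.51.100.4:3128"])
print([rotator.next_proxy() for _ in range(3)])
```

A scraper would call `next_proxy()` before each request and report the outcome afterwards, so that unreliable proxies leave the pool on their own.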

In addition to avoiding blocks, proxies help maintain privacy and security during data extraction. Using residential or dedicated proxies hides the original IP address, making it difficult for websites to trace the origin of the requests. This anonymity is especially important when scraping sensitive data or competing with other scrapers. Moreover, proxies can provide access to region-locked content, widening the range of data that can be collected from different regions.

When automating tasks with proxies, it is important to choose the proxy type that suits the specific use case. HTTP proxies are suitable for standard data extraction tasks, while SOCKS5 proxies offer greater versatility and support for multiple protocols. Many web scraping tools come with built-in proxy support, making it easier to manage proxy rotation. With the right proxy choices, users can improve their data extraction efficiency, increase success rates, and streamline their automation.

Tips for Finding High-Quality Proxies

When looking for high-quality proxies, focus on reliable sources. Seek out well-reviewed proxy services that sell residential and dedicated proxies, as they usually offer better reliability and anonymity. Online forums and communities focused on web scraping can also offer valuable insights and recommendations. Be wary of free proxy lists, as they often include slow proxies that can impede your web scraping operations.

Verification is important in your search for top-notch proxies. Use a reliable proxy checker to test the speed, anonymity, and location of each proxy. This will help you filter out proxies that do not meet your standards. Additionally, prefer proxies that support widely used protocols like HTTP or SOCKS5, since most web scraping tools and libraries work with them.

Lastly, keep an eye on each proxy's uptime and response time. High uptime ensures consistent access, while low latency gives faster responses, which is critical for web scraping. Periodically re-check your proxy list to make sure the proxies on it still perform well. By combining these approaches, you can markedly improve your chances of finding the high-quality proxies that successful web scraping depends on.
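Tracking uptime and response time over repeated checks can be as simple as keeping a few counters per proxy. The sketch below is one hypothetical way to do it; the `ProxyStats` class, the `keep_reliable` helper, and the 90% uptime threshold are illustrative assumptions rather than fixed rules.

```python
from dataclasses import dataclass, field


@dataclass
class ProxyStats:
    """Running record of check results for one proxy."""
    checks: int = 0
    successes: int = 0
    latencies: list = field(default_factory=list)

    def record(self, ok, latency=None):
        self.checks += 1
        if ok:
            self.successes += 1
            if latency is not None:
                self.latencies.append(latency)

    @property
    def uptime(self):
        # Fraction of checks that succeeded; 0.0 before any check has run.
        return self.successes / self.checks if self.checks else 0.0

    @property
    def average_latency(self):
        if not self.latencies:
            return None
        return sum(self.latencies) / len(self.latencies)


def keep_reliable(stats_by_proxy, min_uptime=0.9):
    """Return the proxies whose recorded uptime meets the threshold."""
    return [p for p, stats in stats_by_proxy.items() if stats.uptime >= min_uptime]


stats = ProxyStats()
stats.record(True, 0.2)
stats.record(False)
print(stats.uptime, stats.average_latency)
```

Re-running your checker on a schedule and feeding each result into `record` gives you the history needed to prune the list with `keep_reliable`.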